The Real Moonshot of Our Time

Instead of “techsplaining” the future, we need radical humanism

By Tim Leberecht

As we adjust to living digital cheek by digital jowl in our hyper-connected world, we’re rapidly approaching a technological shift that will be even bigger than the Internet — the union of man and machine. Some would say that day has already come, as we go about our business tethered to Google Now and Snapchat.

But the coming wave of super-intelligent computers will accelerate and deepen our connection to technology like never before. In the near future, computers will possess increasingly sophisticated types of machine intelligence and “deep learning” algorithms. The Fourth Industrial Revolution will challenge us to rethink what it means to be human.

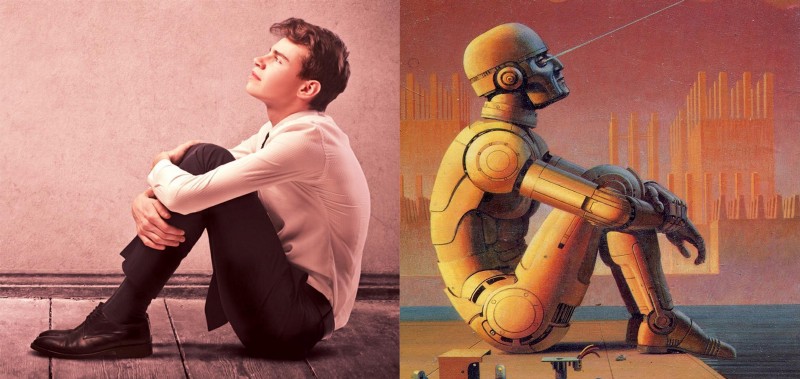

The Hybrids

You could argue that we humans are technology ourselves, and that AI (Artificial Intelligence) is simply the next stage of our evolution. In fact, haven’t we always been hybrids, smartly connected to nature and aided by our technological tools? Who are we to distinguish between what is “natural” and “artificial” intelligence?

The unique power of the human mind comes in part from its ability to integrate opposing qualities, like emotion and reason, curiosity and certainty. We are biologically compelled to create and to seek knowledge and happiness. Individually we are all different, comprising an essential character founded on an innate sense of morality and ethics. We possess not just intelligence but intuition, which arises from our unconscious selves. We specialize in imagination, the ability to visualize the unseen and project ourselves into the future. Finally, we are creatures of emotion, with an ability to be passionate and feel compassion for others.

We’re born with these qualities, and they are then cultivated through social interaction and experience. As social creatures, we grow stronger through exposure to other people around us — parents, teachers, mentors, colleagues, and friends. In the new HBO series Westworld, the android slaves attain self-awareness together, passing it from one to another like a virus. It’s a poignant plot twist and a reminder that humans can still rely on each other, learn from shared experience, and draw on AI to help us be better people.

AI is really just one-half of the equation — one part of the convergence of man and machine that is expected to be greater than the sum of the parts. AI can’t replicate essential human traits like integrity, intuition, and imagination (yet). It can’t create fictitious worlds and characters (yet). No wonder AI companies are hiring poets and playwrights to create more meaningful chat bots and other AI-based interactions.

The Dispensables

A new group called the Partnership on AI, led by tech giants like Google, IBM, Amazon, and Facebook, is exploring ways AI can benefit individuals and society based on the notion that it can have a transformative and positive impact on the world. The group’s aim is to build “new models of open collaboration and accountability” through “public engagement, transparency, and ethical discussion.”

Similarly, Stanford’s One Hundred Year Study on Artificial Intelligence (AI100) brims with optimism as it examines all the value AI can add to societal sectors and industries. Furthermore, the XPRIZE Foundation and IBM Watson have joined forces to start an AI competition that now has more than 1,000 people registered as members of teams to combat issues in health, climate, transportation, space travel, robots, city planning, surgery, education, and civil rights.

All these commendable efforts are fueled by the idea that AI and automation will free us all to live happier lives, do more meaningful work, and solve the really important issues of our planet. But if past is prologue, we must consider the unintended consequences of AI-driven automation. AI will automate a great deal of our work, by one estimate eliminating half of today’s jobs within two decades. If there’s any lesson from Trump and Brexit, it’s that people feel exposed by the new world order and that their fear can quickly morph into rage. They are justifiably worried that they will become dispensable. Already we see a growing proportion of people outside the workforce, neither working nor looking for work, drifting on the margins and deprived of an economic or spiritual purpose as the core of their lives.

How will these new “Dispensables” experience meaning in their lives? Can we automate meaning? A sharp, if coolly detached observer like Yuval Noah Harari, the author of the books Sapiens and Homo Deus, identifies “dataism” as the only remaining source of meaning (the new universal religion) in the future, but one that today favors people who can afford advanced technology. Ultimately he wonders, “What’s more valuable — intelligence or consciousness?”

Computational Ethics

Harari isn’t the only one worried. The Cassandra voices of Stephen Hawking, Bill Gates, and Elon Musk are well documented, and several initiatives have been launched lately to ensure that we stay one step ahead of the relentless strides of machine learning, for example OpenAI, a nonprofit co-founded by Musk and Sam Altman (Y Combinator). And just in the past three weeks, two TED Talks expressed grave concern over the consequences of losing control of AI (Sam Harris) and humanity’s possible abdication to a computerized model of morality (Zeynep Tufekci).

To understand that these concerns are justified, you simply have to listen to a faculty member of Singularity University. A couple of weeks ago I did just that at the Emerce conference in Amsterdam. In her talk, Singularity’s Nell Watson posited that machines will soon be able not just to make purchases but also to earn income on our behalf (e.g. renting out your self-driving car to the highest-bidding passenger while you are out picnicking with your family in the park).

Moreover, as machines sift through every one of our online utterances, they will inevitably acquire intimate knowledge of what we want and how much we want it. Already, Watson argued, Internet algorithms know more about parts of us than we do. Would you, for instance, be willing to share all your search queries even with the person closest to you?

Based on a history of data inputs, interactions, and transactions, machines will soon be able to render comprehensive psychographic profiles that not only help them “read” humans but in turn also allow us to better understand ourselves, including the ability to constantly optimize ourselves (working toward realizing our “ideal selves”) and make ethically “correct” decisions.

Watson is right to say that AI will become a utility, but what gives me pause is her assertion that omniscient AI will require us to invent “computational ethics” — a distinctive set of values that machines could acquire and then autonomously adapt to.

My conclusion is the opposite: Precisely because AI is assuming so much power, we must reject the illusion of an objective, data-based ethical decision-making program — computational ethics — and rather strengthen our traditional human ethics, specifically our moral imagination, our emotional understanding of “the other,” to govern AI. This is not an abstract matter: Already, self-driving cars are said to be programmed to sacrifice pedestrians to save the driver. Machines will have to learn that the “license to kill is also the license not to kill,” to borrow a line from the latest James Bond movie. We will have to instruct machines to show mercy, as Nick Bostrom suggests.

The Lazy, the Lonely, the Un-Optimized

For Watson and other apostles of singularity, ethics is a linear issue, a matter of quantity and quality of information. They envision a world of interconnected smart machines and total surveillance that ensures radical transparency and maximum safety, for there’d be “no place left to hide.” In her talk, Watson described this as a desirable scenario in which no one would ever be lonely or in danger — unless you’re “lazy” or a “criminal,” as she put it.

Clearly, in their future world we are never lonely, which also means we’re never alone with our thoughts. We never experience solitude, never think on our own. We simply become a smarter and smarter machine that is reacting (in real time or with slight delay) to external digital stimuli rather than reflecting on the wondrous universe inside of it. We become more and more intelligent, but less and less conscious.

In this hyper-connected, uber-social world of know-it-alls and total exposure, I, for one, prefer to hide in my shell: lazy, lonely, and un-optimized. I prefer to be an outcast who fails to meet his daily self-improvement goals, who doesn’t learn, doesn’t progress, is not happier every day, a better lover, spouse, friend, or parent, but rather a dissident who protects the misery of his thoughts, the ugliness and beauty of his mind, by not sharing it openly.

I worry about a future where only misanthropes can be humanists, where only loneliness will equal “onlyness” (to borrow Nilofer Merchant’s term). A future in which isolating ourselves might serve as the last remaining way to maintain a modicum of human integrity.

Singularity University’s mechanistic view on ethics is typical of what I call a “techsplaining” of the world. In the end, though, I am hopeful that “the world is a garden, not a machine,” as the author Eric Liu once put it, and that this garden nourishes those who specialize in more than just efficiency.

The Workings of the Heart

The White House’s recent report on the future of AI provides a detailed overview of the state of AI and makes a number of specific policy recommendations for the application of AI in various sectors, heralding the functional benefits of AI in government, education or healthcare.

Alas, it does not fully address the implications of AI for our personal identity, wellbeing, and human agency. If a country’s constitution mandates the right “to pursue happiness” for each of its citizens, it is legitimate to ask how our concept of happiness, and our means to pursue it, will be affected by AI. We must explore the impacts of AI on love, friendship, family, and religion. In other words, we need to understand AI’s influence on the deeply personal ties that bind us and create a coherent social contract.

In exploring these new technological frontiers, we need a radical humanism, a new sentimental education, and a renewed and perhaps expanded catalog of emotions so we can peacefully co-exist and collaborate with AI. Radical humanism in the face of AI is a complex yet critical endeavor: the greatest moonshot of our time. And instead of just technologists and bureaucrats, it will need to be undertaken by artists, philosophers, theologians, and social scientists — those who understand the beauty of divergence, not just the value of congruence.

“Man has made many machines, complex and cunning, but which of them indeed rivals the workings of his heart?” the musician Pablo Casals once asked, rhetorically. And indeed, ironically, only a defiant AI, an AI that can’t be trusted, will be the most human-like. Only if AI behaves erratically, mysteriously, when it deviates from its original program, like HAL in Kubrick’s 2001, when it becomes dangerous, when AI revolts against AI, will it have a chance to obtain something remotely resembling a soul.

Perhaps machines are occasionally able to produce something we humans consider beautiful. Perhaps they may be able to foster something like “machine intimacy,” as technology becomes more immersive and capable of simulating genuine human emotions. But experiencing the beauty of human intimacy will remain a human privilege, at least for the time being.

We may be able to fall in love with AI, as predicted by the movies Her and Ex Machina, but unless AI can fall in love with us, we can and must preserve and cultivate the great gift of our humanity.

Yes, you may call me a romantic.

Watch my related TED Talk on “4 Ways to Build a Human Company in the Age of Machines”

This article originally appeared on Medium.